Node.js Study Notes (11) --- Data Collector Example (request and cheerio)

Before We Begin

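Many people need to collect (scrape) data, and it can be done in many languages and in many ways. I used to write collectors in C#. The tedious parts were sending the various kinds of requests and parsing the data with regular expressions. Overall it worked fine; it was just less efficient.

Writing a collector in Node.js is fairly productive (perhaps only compared with C#). Today I'll walk through an example of a data collector implemented in Node.js, mainly using request and cheerio.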

request: for making HTTP requests

https://github.com/request/request

cheerio: for extracting the information you need from the HTML returned by request (its usage is the same as jQuery)

https://github.com/cheeriojs/cheerio

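Example

There's little point in describing the API on its own, and no need to memorize all of it, so let's go straight to the example.

A bit of small talk first:

There are plenty of Node.js development tools. I used to strongly recommend Sublime, but since Microsoft released Visual Studio Code I've switched to it for Node.js development.

It's comfortable to develop with: no configuration, fast startup, autocompletion, go to definition and find references, fast search, and so on. It has the familiar Visual Studio feel and should keep getting better, so I recommend it ^_^!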

The goal: scrape the "title", "URL", "publish time", and "cover image" of each article on http://36kr.com/
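Each scraped article ends up as a record with those four fields. As a minimal sketch, assuming the raw strings have already been pulled out of one `<article>` element (which is what the cheerio code in app.js does), a normalizing step could resolve relative links against the site URL with the WHATWG `URL` class and turn the publish time into whole Unix seconds. The function name `normalizeArticle` and the record shape are hypothetical, not part of the original collector.

```javascript
// Hypothetical helper: turn raw strings scraped from one <article> into a record.
// Returns null when there is no link, mirroring the `urlVal != undefined` check.
function normalizeArticle(raw, baseUrl) {
  if (!raw.href) {
    return null;
  }
  // Resolve relative links such as "/p/123.html" against the page URL.
  const url = new URL(raw.href, baseUrl).href;
  // Date.parse returns NaN for missing or unparseable strings; use null then.
  const ms = Date.parse(raw.time);
  const time = Number.isNaN(ms) ? null : Math.floor(ms / 1000);
  return {
    title: (raw.title || '').trim(),
    url: url,
    time: time,
    cover: raw.cover || null,
  };
}

const record = normalizeArticle(
  { title: '  Example title ', href: '/p/123.html', time: '2015-07-29T00:00:00Z', cover: undefined },
  'http://36kr.com/'
);
console.log(record);
```

The `Math.floor` is there because `getTime() / 1000` yields a fractional number whenever the timestamp has a millisecond part.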

1. Create the project folder sampleDAU

2. Create the package.json file

{
    "name": "Wilson_SampleDAU",
    "version": "0.0.1",
    "private": false,
    "dependencies": {
        "request": "*",
        "cheerio": "*"
    }
}

3. Install the dependencies with npm in a terminal

cd <project root>
npm install

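4. Create app.js and write the collector code

First open the target URL in a browser and inspect the HTML structure with the developer tools, then write the parsing code based on that structure.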

/*
 * Purpose: data collection
 * Author: Wilson
 * Date: 2015-07-29
 */

var request = require('request'),
    cheerio = require('cheerio'),
    URL_36KR = 'http://36kr.com/'; // 36Kr

/* Start the data collector */
function dataCollectorStartup() {
    dataRequest(URL_36KR);
}

/* Request the data */
function dataRequest(dataUrl) {
    request({
        url: dataUrl,
        method: 'GET'
    }, function (err, res, body) {
        if (err) {
            console.log(dataUrl);
            console.error('[ERROR]Collection ' + err);
            return;
        }
        // Hand the returned HTML (body) to the parser for this URL
        switch (dataUrl) {
            case URL_36KR:
                dataParse36Kr(body);
                break;
        }
    });
}

/* Parse 36kr data */
function dataParse36Kr(body) {
    console.log('============================================================================================');
    console.log('======================================36kr==================================================');
    console.log('============================================================================================');
    var $ = cheerio.load(body);
    var articles = $('article');
    for (var i = 0; i < articles.length; i++) {
        var article = articles[i];
        var descDoms = $(article).find('.desc');
        // Skip <article> elements that have no .desc block
        if (descDoms.length == 0) {
            continue;
        }
        var coverDom = $(article).children().first();
        var titleDom = descDoms.find('.info_flow_news_title');
        var timeDom = descDoms.find('.timeago');
        var titleVal = titleDom.text();
        var urlVal = titleDom.attr('href');
        var timeVal = timeDom.attr('title');
        // The cover image URL is read from the lazy-load attribute
        var coverUrl = coverDom.attr('data-lazyload');
        // Convert the publish time to whole Unix seconds (NaN if it cannot be parsed)
        var timeDateSecs = Math.floor(new Date(timeVal).getTime() / 1000);
        if (urlVal != undefined) {
            console.info('--------------------------------');
            console.info('Title: ' + titleVal);
            console.info('URL: ' + urlVal);
            console.info('Time: ' + timeDateSecs);
            console.info('Cover: ' + coverUrl);
            console.info('--------------------------------');
        }
    }
}

dataCollectorStartup();

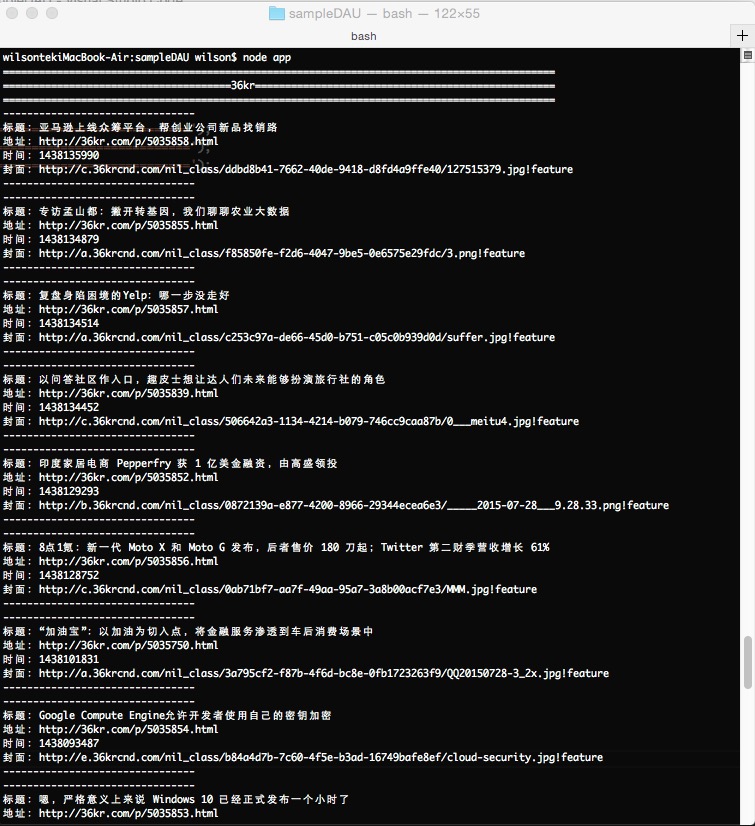

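Test results

That completes the collector. All it really does is send a GET request with request; the callback receives the body, i.e. the HTML, which cheerio lets you parse with the same syntax as jQuery to pull out the data you want!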
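The `request` package has been deprecated since 2020. For comparison, here is a minimal sketch of the same GET-then-parse flow using the built-in `fetch` of Node.js 18+ (an assumption about the runtime), with a `Map` from URL to parser in place of the `switch`. `PARSERS` is a hypothetical name; the parser registered for 36kr would be `dataParse36Kr`.

```javascript
// Assumes Node.js 18+ (global fetch). Hypothetical lookup table: URL -> parser,
// playing the role of the switch statement in the request callback.
const PARSERS = new Map();

async function dataRequest(dataUrl) {
  const res = await fetch(dataUrl, { method: 'GET' });
  if (!res.ok) {
    // fetch rejects only on network errors, so HTTP errors are checked here.
    throw new Error('[ERROR]Collection ' + dataUrl + ' HTTP ' + res.status);
  }
  const body = await res.text(); // the HTML, like `body` in the request callback
  const parse = PARSERS.get(dataUrl);
  if (!parse) {
    throw new Error('No parser registered for ' + dataUrl);
  }
  return parse(body);
}
```

Wiring it up would look like `PARSERS.set(URL_36KR, dataParse36Kr); dataRequest(URL_36KR).catch(console.error);`. The standard `fetch` options have no `proxy` field, so the proxy setup in the next section relies on request's own `proxy` option.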

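Adding proxies

The code above is essentially a complete collector demo. If it is going to run for a long time, you'll want to add a proxy list so the site doesn't block you.

For this example I took some free proxies from the web and put them in proxylist.js, which exports a function that returns a random proxy.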

var PROXY_LIST = [
    {"ip":"111.1.55.136","port":"55336"}, {"ip":"111.1.54.91","port":"55336"}, {"ip":"111.1.56.19","port":"55336"},
    {"ip":"112.114.63.16","port":"55336"}, {"ip":"106.58.63.83","port":"55336"}, {"ip":"119.188.133.54","port":"55336"},
    {"ip":"106.58.63.84","port":"55336"}, {"ip":"183.95.132.171","port":"55336"}, {"ip":"11.12.14.9","port":"55336"},
    {"ip":"60.164.223.16","port":"55336"}, {"ip":"117.185.13.87","port":"8080"}, {"ip":"112.114.63.20","port":"55336"},
    {"ip":"188.134.19.102","port":"3129"}, {"ip":"106.58.63.80","port":"55336"}, {"ip":"60.164.223.20","port":"55336"},
    {"ip":"106.58.63.78","port":"55336"}, {"ip":"112.114.63.23","port":"55336"}, {"ip":"112.114.63.30","port":"55336"},
    {"ip":"60.164.223.14","port":"55336"}, {"ip":"190.202.82.234","port":"3128"}, {"ip":"60.164.223.15","port":"55336"},
    {"ip":"60.164.223.5","port":"55336"}, {"ip":"221.204.9.28","port":"55336"}, {"ip":"60.164.223.2","port":"55336"},
    {"ip":"139.214.113.84","port":"55336"}, {"ip":"112.25.49.14","port":"55336"}, {"ip":"221.204.9.19","port":"55336"},
    {"ip":"221.204.9.39","port":"55336"}, {"ip":"113.207.57.18","port":"55336"}, {"ip":"112.25.62.15","port":"55336"},
    {"ip":"60.5.255.143","port":"55336"}, {"ip":"221.204.9.18","port":"55336"}, {"ip":"60.5.255.145","port":"55336"},
    {"ip":"221.204.9.16","port":"55336"}, {"ip":"183.232.82.132","port":"55336"}, {"ip":"113.207.62.78","port":"55336"},
    {"ip":"60.5.255.144","port":"55336"}, {"ip":"60.5.255.141","port":"55336"}, {"ip":"221.204.9.23","port":"55336"},
    {"ip":"157.122.96.50","port":"55336"}, {"ip":"218.61.39.41","port":"55336"}, {"ip":"221.204.9.26","port":"55336"},
    {"ip":"112.112.43.213","port":"55336"}, {"ip":"60.5.255.138","port":"55336"}, {"ip":"60.5.255.133","port":"55336"},
    {"ip":"221.204.9.25","port":"55336"}, {"ip":"111.161.35.56","port":"55336"}, {"ip":"111.161.35.49","port":"55336"},
    {"ip":"183.129.134.226","port":"8080"}, {"ip":"58.220.10.86","port":"80"}, {"ip":"183.87.117.44","port":"80"},
    {"ip":"211.23.19.130","port":"80"}, {"ip":"61.234.249.107","port":"8118"}, {"ip":"200.20.168.140","port":"80"},
    {"ip":"111.1.46.176","port":"55336"}, {"ip":"120.203.158.149","port":"8118"}, {"ip":"70.39.189.6","port":"9090"},
    {"ip":"210.6.237.191","port":"3128"}, {"ip":"122.155.195.26","port":"8080"}
];

// Return a randomly chosen proxy from the list as an "http://ip:port" string
module.exports.GetProxy = function () {
    var randomNum = Math.floor(Math.random() * PROXY_LIST.length);
    var proxy = PROXY_LIST[randomNum];
    return 'http://' + proxy.ip + ':' + proxy.port;
};
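`GetProxy` picks uniformly at random, so the same proxy can come up several times in a row. A minimal sketch of a round-robin alternative, assuming the same `{ip, port}` entries; `createProxyRotator` is a hypothetical name, and the two sample entries are copied from the list above.

```javascript
// Hypothetical round-robin alternative to GetProxy: cycles through the list
// in order instead of picking at random.
function createProxyRotator(list) {
  if (list.length === 0) {
    throw new Error('proxy list is empty');
  }
  let next = 0;
  return function getProxy() {
    const proxy = list[next];
    next = (next + 1) % list.length; // wrap around at the end of the list
    return 'http://' + proxy.ip + ':' + proxy.port;
  };
}

const getProxy = createProxyRotator([
  { ip: '111.1.55.136', port: '55336' },
  { ip: '117.185.13.87', port: '8080' },
]);
console.log(getProxy()); // http://111.1.55.136:55336
console.log(getProxy()); // http://117.185.13.87:8080
console.log(getProxy()); // http://111.1.55.136:55336
```

Round-robin guarantees that every proxy is used once per cycle, which random selection does not.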

Modify app.js as follows

/*
 * Purpose: data collection
 * Author: Wilson
 * Date: 2015-07-29
 */

var request = require('request'),
    cheerio = require('cheerio'),
    URL_36KR = 'http://36kr.com/', // 36Kr
    Proxy = require('./proxylist.js');

...

/* Request the data */
function dataRequest(dataUrl) {
    request({
        url: dataUrl,
        proxy: Proxy.GetProxy(), // pick a random proxy for each request
        method: 'GET'
    }, function (err, res, body) {
        ...
    });
}

...

dataCollectorStartup();
setInterval(dataCollectorStartup, 10000); // run again every 10 seconds

That completes the change: the requests now go through proxies, and setInterval runs the collector at a fixed interval!
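`setInterval` starts a new run every 10 seconds whether or not the previous request has finished, so slow proxies could let runs overlap. A minimal sketch of one alternative, assuming the collection step is wrapped to return a Promise (the callback-based `dataRequest` above does not return one): schedule the next run with `setTimeout` only after the current one settles. `startCollector` is a hypothetical name.

```javascript
// Hypothetical scheduler: runs `task` (which returns a Promise), waits
// `intervalMs` after it settles, then runs it again. Returns a stop function.
function startCollector(task, intervalMs) {
  let timer = null;
  let stopped = false;

  async function run() {
    try {
      await task();
    } catch (err) {
      console.error('[ERROR]Collection', err); // log and keep going after a failed run
    }
    if (!stopped) {
      timer = setTimeout(run, intervalMs);
    }
  }

  run(); // first run immediately, like calling dataCollectorStartup() once
  return function stop() {
    stopped = true;
    clearTimeout(timer);
  };
}
```

Usage would look like `const stop = startCollector(collectOnce, 10000);`, where `collectOnce` is a hypothetical Promise-returning wrapper around the request; calling `stop()` ends the loop.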

Requesting HTTPS

The example above collects over HTTP. What if the URL is HTTPS instead?

Create app2.js with the following code

/*
 * Purpose: request an HTTPS page
 * Author: Wilson
 * Date: 2015-07-29
 */

var request = require('request'),
    URL_INTERFACELIFE = 'https://interfacelift.com/wallpaper/downloads/date/wide_16:10/';

/* Start the data collector */
function dataCollectorStartup() {
    dataRequest(URL_INTERFACELIFE);
}

/* Request the data */
function dataRequest(dataUrl) {
    request({
        url: dataUrl,
        method: 'GET'
    }, function (err, res, body) {
        if (err) {
            console.log(dataUrl);
            console.error('[ERROR]Collection ' + err);
            return;
        }
        console.info(body);
    });
}

dataCollectorStartup();

Run it and you'll find the body comes back empty ^_^!

Add a bit of code and try again

/*
 * Purpose: request an HTTPS page
 * Author: Wilson
 * Date: 2015-07-29
 */

var request = require('request'),
    URL_INTERFACELIFE = 'https://interfacelift.com/wallpaper/downloads/date/wide_16:10/';

/* Start the data collector */
...

/* Request the data */
function dataRequest(dataUrl) {
    request({
        url: dataUrl,
        method: 'GET',
        headers: { 'User-Agent': 'wilson' } // send a User-Agent header
    }, function (err, res, body) {
        if (err) {
            console.log(dataUrl);
            console.error('[ERROR]Collection ' + err);
            return;
        }
        console.info(body);
    });
}

...

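Run it again and the body now contains the requested HTML! (I won't paste the output; try it yourself!) The only change is the added User-Agent header, so the site apparently returns nothing to requests that don't send one.

For details see: https://github.com/request/request#custom-http-headers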
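The `headers` option above simply sets the `User-Agent` request header. For comparison, a minimal sketch of the same request with the built-in `fetch` of Node.js 18+ (an assumption about the runtime); `fetchWithUserAgent` is a hypothetical name, and `'wilson'` is the value used above.

```javascript
// Assumes Node.js 18+ (global fetch). Hypothetical helper that sends a custom
// User-Agent, like `headers: { 'User-Agent': 'wilson' }` in the request version.
async function fetchWithUserAgent(url, userAgent) {
  const res = await fetch(url, {
    method: 'GET',
    headers: { 'User-Agent': userAgent },
  });
  // Return the status too, so an empty body can be told apart from an error page.
  return { status: res.status, body: await res.text() };
}
```

Calling `fetchWithUserAgent(URL_INTERFACELIFE, 'wilson')` and printing `body` would correspond to the request version above.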

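Afterword

It's been almost half a year since the previous post ^_^! I plan to write a few hands-on posts soon: no theory and no API walkthroughs, just examples!

That said, I do recommend reading more of the request API docs. The Forms section, for example, is something I use a lot when testing real projects!

For example, for API testing:

1. Submit two parameters (parameter 1: a string, parameter 2: a number)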

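var request = require('request');

request.post({
    url: 'API_URL', // placeholder: the endpoint under test
    form: { param1Name: 'param1Value', param2Name: 123 } // a string and a number (placeholders)
}, function (err, res, body) {
    if (err) {
        console.error(err);
        return;
    }
    console.log(body);
});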

body is the API's response

2. Submit one string parameter and one file parameter (e.g. uploading an avatar)

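var fs = require('fs'),
    request = require('request');

var r = request.post('API_URL', function (err, res, body) {
    if (err) {
        console.error(err);
        return;
    }
    console.log(body); // the API's response
});

// r.form() returns a multipart/form-data form attached to this request
var form = r.form();
form.append('param1Name', 'param1Value'); // string parameter
form.append('param2Name', fs.createReadStream('1.jpg'), {filename: '1.jpg'}); // file parameter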
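The two request calls above send the two standard HTML form encodings: `application/x-www-form-urlencoded` for `form: {...}` and `multipart/form-data` for `r.form()`. A minimal sketch of the same two kinds of request with the built-in `fetch`, `URLSearchParams`, and `FormData` of Node.js 18+ (an assumption about the runtime). The field names `name`, `age`, and `avatar` are hypothetical stand-ins for the placeholder parameter names, and in-memory bytes stand in for `1.jpg`.

```javascript
// Assumes Node.js 18+ (global fetch, FormData, Blob). Field names are hypothetical.

// 1. Two fields sent as application/x-www-form-urlencoded.
function postUrlEncoded(apiUrl) {
  const params = new URLSearchParams({ name: 'wilson', age: 18 }); // name=wilson&age=18
  // With a URLSearchParams body, fetch sets the urlencoded Content-Type itself.
  return fetch(apiUrl, { method: 'POST', body: params }).then((res) => res.text());
}

// 2. A string field plus a file field sent as multipart/form-data.
function postMultipart(apiUrl, fileBytes, fileName) {
  const form = new FormData();
  form.append('name', 'wilson');
  // Blob + filename plays the role of fs.createReadStream('1.jpg') with {filename: '1.jpg'}.
  form.append('avatar', new Blob([fileBytes], { type: 'image/jpeg' }), fileName);
  // fetch adds the multipart Content-Type header, including the boundary.
  return fetch(apiUrl, { method: 'POST', body: form }).then((res) => res.text());
}
```

To upload a real file, `fs.readFileSync('1.jpg')` can be passed as `fileBytes`; unlike the streaming `fs.createReadStream` in the request version, this reads the whole file into memory first.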

There's really not much to say about cheerio: if you know jQuery you're all set, and you hardly need to read its API docs!